Encoding 101 - Part 1

So you think text is simply text. Well, think again. In this series of blog posts we shall descend to the byte level, study how text is actually represented by computers, and discuss how this impacts your integration solutions.

What is encoding?

Encoding is the way a computer stores text as raw binary data. In order to read text data properly, you have to know which encoding was used to store it, and then use that same encoding to interpret the binary data in order to retrieve the original text. “That doesn’t sound so bad”, I hear you say, “surely there are just a couple of different encodings, and surely all text data contains information about which encoding it uses, right?” Well, the answers to those questions are unfortunately “No” and “No”, respectively, which is why encoding can be such a nightmare to deal with for developers.
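To make this concrete, here is a minimal Python sketch. The sample word and the choice of UTF-8 and Latin-1 as the two encodings are only for illustration. The same bytes turn into different text depending on which encoding the reader assumes, and nothing in the bytes themselves says which one is right:

```python
# The text "café" stored using the UTF-8 encoding.
data = "café".encode("utf-8")
print(data)  # b'caf\xc3\xa9': the é became two bytes

# Reading the bytes back with the same encoding recovers the original text.
correct = data.decode("utf-8")
print(correct)  # café

# Reading them with a different encoding (Latin-1) silently produces garbage.
wrong = data.decode("latin-1")
print(wrong)  # cafÃ©
```

Note that the second decode does not raise an error. Latin-1 assigns a character to every possible byte value, so the program has no way of noticing that it guessed wrong.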

What is text?

What text actually is depends on the context. When stored somewhere, or in transit somewhere for that matter, text is simply a lump of binary data, the same as any other kind of data. At the most basic level, it is a long row of zeroes and ones. When it is being actively worked on by a computer, it is still binary data, but the system interprets it as individual characters, and in many cases converts it into another binary representation while processing it. This internal representation is usually based on Unicode, typically one of the Unicode encodings such as UTF-16.

A brief introduction to Unicode

Back in 1988, digital data processing was becoming more and more prevalent, but the market was still extremely fragmented, with every vendor using its own proprietary, non-standard solutions for most things. As a result, interoperability between different computer systems was virtually non-existent, and sending data from one system to another was often extremely challenging. Around this time, an attempt was made to stem the flow of emerging encoding problems by introducing a standardized common character set known as Unicode. This way, it was reasoned, all the different encodings in use could at least be mapped to a common set of characters, so there would never again be any doubt as to which character a given code was supposed to represent.

From the Wikipedia article for Unicode:

Unicode, formally The Unicode Standard, is a character encoding standard maintained by the Unicode Consortium designed to support the use of text in all of the world's writing systems that can be digitized. Version 16.0 of the standard defines 154998 characters and 168 scripts used in various ordinary, literary, academic, and technical contexts.

Many common characters, including numerals, punctuation, and other symbols, are unified within the standard and are not treated as specific to any given writing system. Unicode encodes 3790 emoji, with the continued development thereof conducted by the Consortium as a part of the standard.

…

At the most abstract level, Unicode assigns a unique number called a code point to each character. Many issues of visual representation—including size, shape, and style—are intended to be up to the discretion of the software actually rendering the text, such as a web browser or word processor.
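In Python, for example, the built-in `ord()` function returns a character's code point, which is conventionally written in hexadecimal with a `U+` prefix. A small sketch, with arbitrarily chosen sample characters, that also uses the standard `unicodedata` module to look up each character's official name:

```python
import unicodedata

# A few arbitrary sample characters, written as escapes: A, é, € and an emoji.
samples = ["A", "\u00e9", "\u20ac", "\U0001F600"]

for char in samples:
    code_point = ord(char)  # the number Unicode assigns to this character
    print(f"U+{code_point:04X}", code_point, unicodedata.name(char))
```

For the euro sign, for instance, this prints `U+20AC 8364 EURO SIGN`.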

The Unicode character set is not an encoding itself, but merely a standardized set of all the characters that anyone could feasibly expect to encounter in a data file somewhere. The Unicode Standard also defines a number of actual encodings, such as UTF-8, UTF-16 and UTF-32. What these have in common, unlike most other text encodings, is that they can represent the entire Unicode character set.
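To illustrate the difference between the character set and its encodings, the following Python sketch encodes one character, the euro sign (code point U+20AC), with three Unicode encodings. The big-endian variants `utf-16-be` and `utf-32-be` are used here only so that Python does not prepend a byte order mark to the output:

```python
euro = "\u20ac"  # one character, one code point: U+20AC

# The same code point produces different bytes in each Unicode encoding.
for encoding in ["utf-8", "utf-16-be", "utf-32-be"]:
    encoded = euro.encode(encoding)
    print(f"{encoding:10} {len(encoded)} bytes: {encoded.hex(' ').upper()}")
```

The code point is the same in every case (U+20AC); only the byte sequence used to store it changes, from three bytes in UTF-8 to two in UTF-16 and four in UTF-32.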

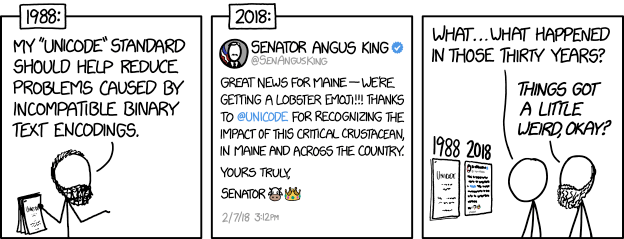

XKCD 1953 - The history of Unicode

While Unicode did alleviate some of the problems inherent in having a plethora of co-existing character encodings, it did not solve all of them. For one thing, adoption of the accompanying encoding systems was slow, and is still far from universal to this day. For another, while having a common character set to map encodings to was certainly helpful, it did not change the unfortunate fact that many types of textual data do not contain any information about which encoding system was used to produce them.

So how does encoding work?

Right, let’s get down into the nitty-gritty details. When you save a text-based file, what is actually stored? To begin with, we will look at one of the oldest and simplest encodings, ASCII. Here is an excerpt of the Wikipedia article for ASCII:

ASCII, an acronym for American Standard Code for Information Interchange, is a character encoding standard for representing a particular set of 95 (English language focused) printable and 33 control characters – a total of 128 code points. The set of available punctuation had significant impact on the syntax of computer languages and text markup. ASCII hugely influenced the design of character sets used by modern computers; for example, the first 128 code points of Unicode are the same as ASCII.

ASCII encodes each code-point as a value from 0 to 127 – storable as a seven-bit integer. Ninety-five code-points are printable, including digits 0 to 9, lowercase letters a to z, uppercase letters A to Z, and commonly used punctuation symbols. For example, the letter i is represented as 105 (decimal). Also, ASCII specifies 33 non-printing control codes which originated with Teletype devices; most of which are now obsolete. The control characters that are still commonly used include carriage return, line feed, and tab.
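The numbers in the quote are easy to check. A quick Python sketch, relying on the built-in `str.isprintable()` to tell printable characters from control characters:

```python
# Split the 128 ASCII code points into printable and control characters.
printable = [code for code in range(128) if chr(code).isprintable()]
control = [code for code in range(128) if not chr(code).isprintable()]

print(len(printable), len(control))  # 95 33
print(ord("i"))                      # 105, as in the quote
print((127).bit_length())            # 7: the largest code fits in seven bits
```

Python counts the space character (32) as printable, which matches ASCII's 95 printable characters; the 33 control codes are 0 to 31 plus 127 (DEL).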

As ASCII was developed in the US and is based on the English alphabet, it only contains the standard English characters. This means that text containing non-English characters (such as accented letters, or special letters used in other languages) cannot be accurately encoded in ASCII without replacing those characters with standard English ones. ASCII was designed with 7-bit codes, but because all modern computers use the 8-bit byte as their smallest addressable memory unit, ASCII characters are now stored using 8 bits per character, with the most significant (leftmost) bit always set to 0.
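Here is what that looks like at the bit level, as a small Python sketch with an arbitrary sample string. Each character becomes one byte, and because every ASCII value is below 128, the highest bit of that byte is always 0:

```python
text = "Hi!"
data = text.encode("ascii")  # one byte per character

for char, byte in zip(text, data):
    # Show each byte as eight binary digits; the first one is always 0 for ASCII.
    print(char, byte, format(byte, "08b"))

print(all((byte & 0x80) == 0 for byte in data))  # True: the top bit is never set
```

For example, the letter i (105) is stored as `01101001`.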

The entire ASCII encoding standard looks like this:
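Such a table can also be generated with a few lines of code. The following Python sketch lays out all 128 codes in a 16-column grid, labelling the control codes with their standard abbreviations. The layout and the `ascii_label` and `ascii_table` helpers are our own, purely illustrative:

```python
# Standard ASCII abbreviations for the 32 control codes 0x00-0x1F.
CONTROL_NAMES = [
    "NUL", "SOH", "STX", "ETX", "EOT", "ENQ", "ACK", "BEL",
    "BS",  "HT",  "LF",  "VT",  "FF",  "CR",  "SO",  "SI",
    "DLE", "DC1", "DC2", "DC3", "DC4", "NAK", "SYN", "ETB",
    "CAN", "EM",  "SUB", "ESC", "FS",  "GS",  "RS",  "US",
]


def ascii_label(code: int) -> str:
    """Return a short, visible label for an ASCII code (0-127)."""
    if code < 0x20:
        return CONTROL_NAMES[code]
    if code == 0x20:
        return "SP"   # space, shown by name so it stays visible
    if code == 0x7F:
        return "DEL"  # the one control code outside 0x00-0x1F
    return chr(code)


def ascii_table() -> str:
    """Lay out all 128 codes in 8 rows of 16, like a classic ASCII chart."""
    header = "    " + "".join(f"{col:>4X}" for col in range(16))
    lines = [header]
    for row in range(8):
        cells = "".join(f"{ascii_label(row * 16 + col):>4}" for col in range(16))
        lines.append(f"{row * 16:02X}  {cells}")  # row label: high hex digit
    return "\n".join(lines)


print(ascii_table())
```

To find a character's code, add the row label to the column label: `A` sits in row `40`, column `1`, giving 0x41 (65).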

Let us look at some example texts to see how they would be encoded in ASCII. Since it quickly becomes impractical to write out the binary representations of longer texts in full, we will use hexadecimal notation for the binary data.
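As a minimal sketch of producing such a hexadecimal dump in Python (the sample string and the `ascii_hex` helper are our own, and the separator argument to `bytes.hex()` requires Python 3.8 or later):

```python
def ascii_hex(text: str) -> str:
    """Encode text as ASCII and return its bytes as space-separated hex pairs."""
    return text.encode("ascii").hex(" ").upper()


print(ascii_hex("Hello, World!"))
# 48 65 6C 6C 6F 2C 20 57 6F 72 6C 64 21
```

Each pair of hex digits is one byte, and therefore one character: `48` is H, `2C` is the comma and `20` is the space.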

When you open an ASCII encoded text file in a text editor, the program reads each byte of the file and looks up the value in an ASCII table to determine which character to show the user for that byte.
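That lookup process can be sketched in a few lines of Python. This is a simplified model of what an editor does, not how any particular editor is implemented; the `ASCII_TABLE` dictionary and `decode_ascii` function are hypothetical names for illustration:

```python
# A lookup table from every ASCII byte value (0-127) to its character.
ASCII_TABLE = {code: chr(code) for code in range(128)}


def decode_ascii(data: bytes) -> str:
    """Turn bytes into text one byte at a time via table lookups."""
    chars = []
    for byte in data:
        # Bytes 128-255 are not ASCII; show the Unicode replacement character instead.
        chars.append(ASCII_TABLE.get(byte, "\ufffd"))
    return "".join(chars)


raw = bytes([0x48, 0x65, 0x6C, 0x6C, 0x6F])  # five bytes read from a hypothetical file
print(decode_ascii(raw))  # Hello
```

In practice you would use Python's built-in `data.decode("ascii", errors="replace")`, which gives the same result.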

ASCII is a very limited encoding, though. It contains only 95 printable characters, and can therefore only be used to encode text made up of those characters. If your text data contains any characters outside that set, you will have to use another encoding.
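A short Python sketch of what happens when text goes beyond that set. The sample word and the `is_ascii_encodable` helper are our own; Python's built-in `str.isascii()` performs the same check:

```python
def is_ascii_encodable(text: str) -> bool:
    """Return True if every character of text exists in ASCII."""
    try:
        text.encode("ascii")
    except UnicodeEncodeError:
        return False  # at least one character is outside 0-127
    return True


text = "café"
print(is_ascii_encodable(text))  # False: é is code point 233

# Forcing it through replaces the character, losing information.
print(text.encode("ascii", errors="replace"))  # b'caf?'

# An encoding that covers all of Unicode, such as UTF-8, keeps the é intact.
print(text.encode("utf-8"))  # b'caf\xc3\xa9'
```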

Those are the basics of how encoding works. In the next part of the series, we will look at some different encodings and how they differ from one another.