The MS Office character replacement problem

The MS Office suite has been responsible for major productivity boosts over the years. However, it can also play merry hell with integration solutions. Let us look at the reasons why.

I’m sorry, the what problem?

Yes, it is a bit of a mouthful. Let me start by explaining what it is that MS Office actually does, and later we’ll get to why it can become a problem.

The MS Office suite has a feature which can be disabled, but is switched on by default. What this feature does is to replace certain characters you type with other characters. There are many such replacements but in this article, I shall be focusing on the three of them which I have most frequently seen to cause problems.

These are the three replacements:

So, why is this a problem? Since all Office is doing is replacing some Unicode characters with other Unicode characters, this should not cause any issues, should it? No, it shouldn’t, not on its own, but there are more factors at play here. In order to understand the issue, we need to first have a look at two of the common encodings in use today, Windows-1252 and ISO-8859-1, and then go back in time a bit and look at how these have been used historically.

Two encodings: Windows-1252 and ISO-8859-1

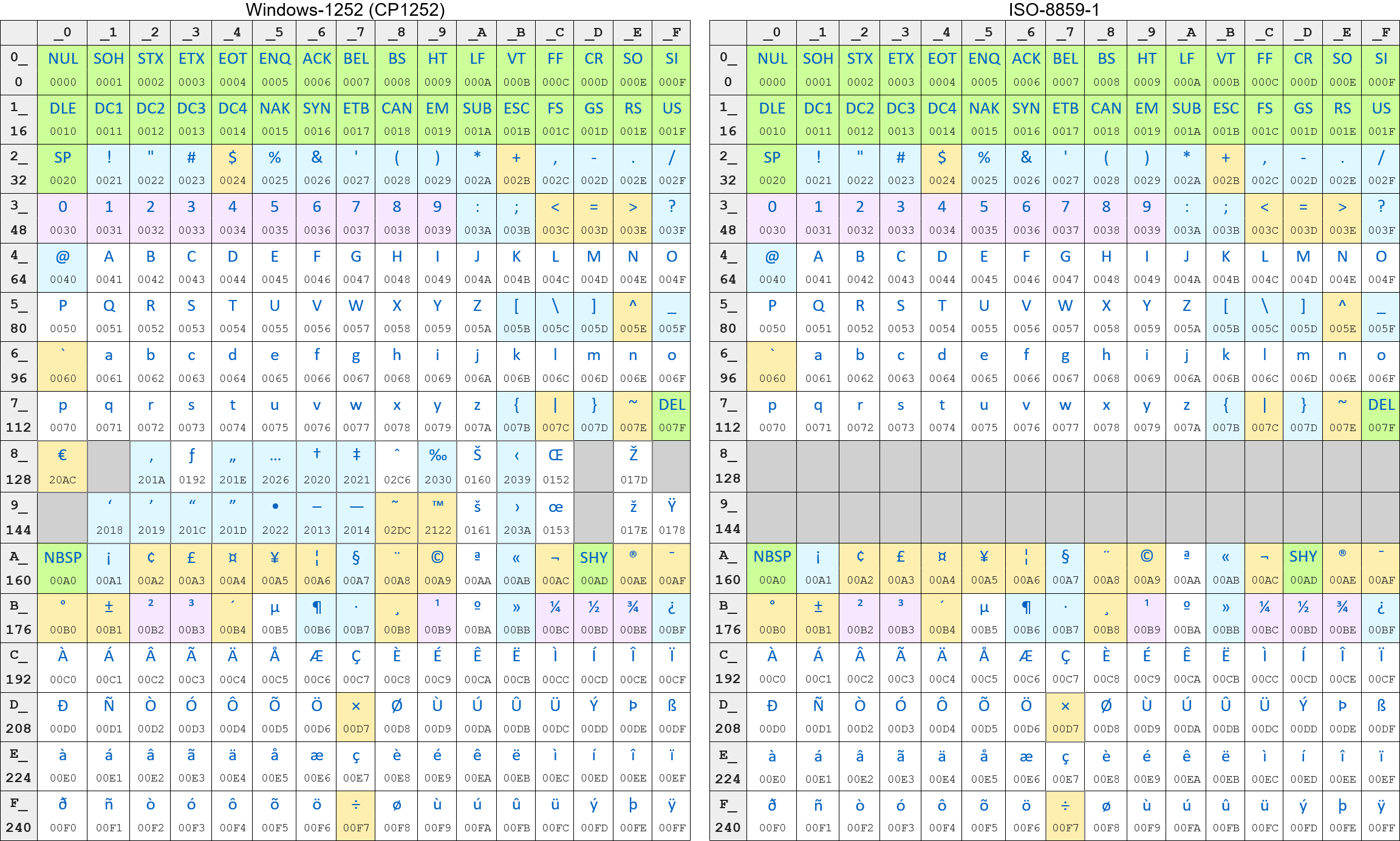

Let us first have a look at the two encodings:

As can be seen, Windows-1252 and ISO-8859-1 are mostly identical. However, Windows-1252 contains several additional printable characters in the 80-9F range which are not featured in ISO-8859-1. Among these are the four mentioned above, U+2013, U+2026, U+201C and U+201D.

A brief history lesson

What is certain is that historically, data encoded using Windows-1252 has often been labelled as using the ISO-8859-1 encoding instead. It is of course impossible for us to know exactly why some developers choose to do certain things but given what we know of those encodings and their origins and usage, we can come up with a likely hypothesis.

In the early days of Windows, the OS was mainly used for workstations, since there wasn’t really a server version of the OS yet. As such, a lot of data was generated on Windows machines, which would at some point need to be read by non-Windows machines. Since the Windows-1252 encoding was generally not known to non-Windows systems, many programs intentionally labelled data using the Windows-1252 encoding as ISO-8859-1 instead. As an ISO standard, that encoding would be known to most systems, and given that all commonly used characters (at the time) were encoded identically in the two encodings, this would mostly allow those systems to read the data correctly.

As time went on, two things happened to make this no longer necessary. For one thing, Windows gained a lot of ground as a server OS, raising the likelihood that data produced on a Windows workstation would be processed by a Windows server. For another, support for various common encodings spread to most systems, allowing non-Windows machines to use the actual Windows-1252 encoding to decode data originating from a Windows system.

However, one well known principle is “there is nothing so permanent as a temporary solution”. When there is no pressing need to change something that seems to work well enough, even if it is technically implemented in a wrong/undesirable fashion, spending the effort to correct it is a rare occurrence. Especially if any problems derived from the implementation tends to happen far away rather than in the local system itself. As such, many programs continued to label Windows-1252 data as ISO-8859-1, even though there was no longer any reason to do so. That practice continues to some extent to this day.

In response to this, some software has taken to automatically use the Windows-1252 encoding to decode any data labelled as ISO-8859-1. At this point it has gotten so far that the official HTML5 specification explicitly states that pages labelled as ISO-8859-1 should be decoded using Windows-1252. It makes sense to do this since Windows-1252 matches every single defined character code in ISO-8859-1. This means that even if the data is not mislabeled but actually is encoded using ISO-8859-1, it will still be decoded correctly.

The current state of affairs

So where does that leave us in the present day? Well, we are in an awkward scenario, where some systems label Windows-1252 data as ISO-8859-1 while others don’t, and some systems decode data labelled as ISO-8859-1 using the Windows-1252 encoding while others don’t.

The data that gets exchanged in integration scenarios comes from many different sources. Quite often, the data in a single message comes from many different sources. Many data points are automatically generated and validated (dates, product IDs, quantities, etc.) but a lot of data is “free text” typed into a system manually by human beings.

Let us look at an example. A company has ordered a shipment of goods from a vendor. The warehouse site to which the goods need to be delivered contains several large warehouses for different types of goods. To ensure a smooth delivery, additional delivery instructions are provided for the shipping provider: Enter through “Gate C” – Unload the shipment at “Dock C7”.

These instructions were written in an email using MS Outlook and sent to the vendor. Because of the character replacement feature, even though whoever wrote it just typed normal double quotes, Outlook replaced them with the special left and right double quotes, as well as replacing the dash with the longer version, so the text contains 3 of the Windows-1252 characters from the 80-9F range. The person processing the order copied and pasted the instructions from the email into the order management system. Once the order is ready for delivery, the vendor needs to generate a freight note and send it to the shipping provider. This freight note includes the shipping instructions.

From this point onwards, several things can go wrong. Let us look at some of them in detail.

The problem rears its ugly head

Firstly, between the original sender of the data and the eventual receiver of the data, at any point in the flow where encoding or decoding takes place, there is the potential for problems if the encodings don’t match. Generally, encoding happens everywhere data is sent somewhere else, and decoding happens everywhere data is received from somewhere else. In this context “somewhere else” is not necessarily a question of geography. Several components in the same solution can potentially decode the data, manipulate it in some way (or merely extract certain values from it in order to determine what to do with it), and then re-encode it before handing it over to another component for further processing.

Scenario 1: Windows-1252 data is labelled as ISO-8859-1 by sender, receiver decodes it as ISO-8859-1

Depending on how the receiver handles the situation of encountering byte codes that do not match any printable character in the encoding it uses, the end result can vary:

Enter through âGate Câ â Unload the shipment at âDock C7â (unknown characters are interpreted as non-printable control characters, not necessarily visible in all programs)

Enter through �Gate C� � Unload the shipment at �Dock C7� (unknown characters are replaced with the Unicode invalid character glyph, or an empty square in some programs/fonts)

Enter through ?Gate C? ? Unload the shipment at ?Dock C7? (unknown characters are replaced with question marks)

Enter through Gate C Unload the shipment at Dock C7 (unknown characters removed)

[Receiver gets an error which halts the processing of the data]

Depending on what happens later in the flow, the first two results may cause further technical problems down the line. Also note that apart from the characters auto-replaced by an MS Office application, the rest of the text is absolutely fine.

For a more detailed description of how this particular kind of error plays out, please read the Encoding 101 series of blog posts.

Scenario 2: Data is received correctly, but has to be encoded as ISO-8859-1 for the next receiver

This is a different kind of problem, and not one with an easy solution. Since the receiver expects data encoded as ISO-8859-1, that means that they can only receive those characters that are included in that encoding. What to do then, when you have data to send which contains characters that cannot be encoded in the way the receiver requires?

As with so many other integration problems, it depends on the specific scenario. The receiver may have specific requirements for how it should be handled, but then again they might not.

Some of the issues have fairly easy solutions, albeit ones that do need custom handling. The quotation marks and the dash for instance can be easily replaced back to their “normal” counterparts, the characters they were originally typed as before Office replaced them. However, others like the ellipsis (the three dots) can cause problems.

The problem with the ellipsis in particular is that the logical replacement is the opposite of what MS Office does, that is to replace it with three dots. This means that the string which contains the character will become two characters longer (per ellipsis it contains) than it was before. This can wind up causing problems if the integration involves either positional data formats, or data fields with limited width. If the data being sent is adjusted to the known length of the field that needs to store it in the end, having the data suddenly become longer can lead to data being truncated, if not causing errors outright. I should stress that even though this seems like a far-fetched scenario, this is not a hypothetical situation. I have seen this happen in the real world.

Honorable mention: The bullet point •

Another character worth mentioning at this point (pun unintended) is the bullet point character. It is not a part of the replacement issue as such, but it resides in the same block of encodings in Windows-1252 that have no equivalent in ISO-8859-1. Because of this, it gives rise to the same type of problems as the other characters mentioned. The thing about bullet points is that while they do not occur as frequently in general, there are a few specialized scenarios where they do occur very often.

Case in point: Product descriptions. Look at any digital storefront and examine the descriptions of the various products. They very often contain one or more bullet lists of features, specifications, usage scenarios, etc. Product data, including the descriptions, are frequently exchanged between systems, so it is a good idea to keep this in mind when dealing with product data in an integration.

Is this always a problem?

No, it isn’t. In most cases everything works just fine, even though these characters crop up in integration data. It is only in specific scenarios, such as the examples outlined above, that the problem manifests itself.

The thing is though, that an integration can be running flawlessly for years and then suddenly succumb to this issue, for instance if a vendor sending product data through the integration starts selling products from a new manufacturer whose Product Information Management (PIM) system uses these characters in their product descriptions. Also, it is worth keeping in mind that some of the integrations that work fine with these characters today only do so because they ran into the problem at some point in the past and had to be updated to account for it.

What can we do about this?

As mentioned earlier, the official HTML5 specification mandates using the Windows-1252 encoding to interpret pages labelled as ISO-8859-1. This means that any HTML5 compliant web browser will not suffer from this problem. As such, for web pages the problem has now been handled permanently.

Unfortunately, the same cannot be said for integration solutions. A web browser only has to decode a page for a user to read, but an integration solution has to interpret the incoming data correctly, and then re-encode it for other software to interpret later on. In a solution that is coded manually from the bottom up, you can of course take this issue into account and simply do what web browsers do. However, most integration solutions are based on some sort of framework (or several frameworks), which adhere to other official standards that do not incorporate the “bait-and-switch” approach that HTML5 does, and which are not easily overridden.

What we can do is to try and make sure that the systems we use export and import data using Unicode compliant encodings, preferably UTF-8. Being Unicode compliant, UTF-8 supports the entire Unicode character set, rather than only a small subset of those characters, as Windows-1252 and ISO-8859-1 do. Additionally, UTF-8 is nearly universal (as of January 2025, 98.5% of all web pages use UTF-8) and is not subject to the same sort of encoding confusion that exists between Windows-1252 and ISO-8859-1.

Apart from that, we should also strive to use data formats that include information about their encoding, such as XML or EDIFACT.

However, we do not always have the luxury of being able to choose how data should be exchanged, and often we have to make do with the way things are. There are still many old systems out there that only support older encodings and formats, not to mention newer systems coded by people with a less than stellar understanding of such matters.

Ultimately, the most important thing we can do is to know about these issues. It means that we can deliberately avoid them in some cases, and that when we do encounter them, we can recognize them for what they are. Knowing is half the battle. This enables us to act more quickly, and to better be able to find a solution.